Dashboard

Dashboard overview and settings

Overview

The dashboard is designed to help you easily deploy new applications and versions, manage existing ones, and review your current deployments at a glance. This page in the quickstart guide describes the hierarchy of SPS entities and how they relate to your UE project; we recommend reading it first if you are unfamiliar with what applications, versions, and instances represent on SPS.

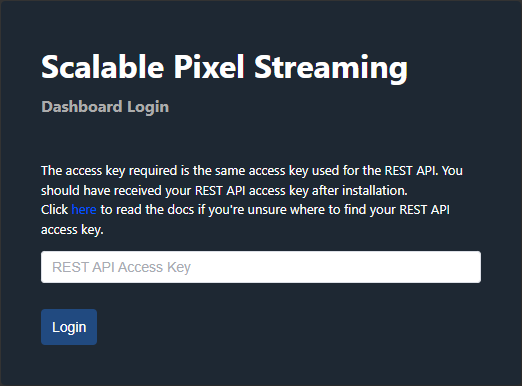

Logging in to your dashboard

Once SPS installation is complete, your Scalable Pixel Streaming cloud installation lists your dashboard URL and access key as outputs in the configuration of your stack. Navigate to the provided dashboard URL and enter the REST API access key into the input field to log in.

The steps below will help you find these outputs in your cloud installation:

On CoreWeave

- Log in to the CoreWeave Cloud;

- Select Applications in the menu on the left;

- Select the deployed Scalable Pixel Streaming application;

- Find the dashboard URL ending in /dashboard in the table labeled Access URLs;

- The Application Secrets section lists the access key. You can click the eye icon to reveal the key, or the clipboard icon to copy it.

On AWS

- Log in to the AWS Management Console and follow the prompts (see AWS Management Console Documentation);

- Type cloudformation in the search field and select the CloudFormation option to view your stacks;

- Select the <Stack Name> that you created when installing Scalable Pixel Streaming;

- Navigate to the Outputs tab;

- Find the dashboard URL and REST API access key on this page.
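As a scripted alternative to the console steps above, the sketch below reads the same stack outputs through boto3's CloudFormation `describe_stacks` call. It assumes boto3 is installed and AWS credentials are configured; the helper names are hypothetical, and because the exact output key names are not listed on this page, it returns every output rather than picking specific keys.

```python
from typing import Any, Dict


def outputs_from_response(response: Dict[str, Any]) -> Dict[str, str]:
    """Map OutputKey -> OutputValue from a CloudFormation describe_stacks response."""
    stacks = response.get("Stacks", [])
    if not stacks:
        return {}
    # describe_stacks with a StackName returns a single-element "Stacks" list.
    return {
        output["OutputKey"]: output["OutputValue"]
        for output in stacks[0].get("Outputs", [])
    }


def fetch_stack_outputs(stack_name: str) -> Dict[str, str]:
    """Query CloudFormation for the named stack (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the parser above works without boto3 installed

    client = boto3.client("cloudformation")
    return outputs_from_response(client.describe_stacks(StackName=stack_name))
```

Calling `fetch_stack_outputs("<Stack Name>")` with the stack name you chose at installation returns a dictionary that should include the dashboard URL and the REST API access key. Treat the access key as a secret when printing or logging it.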

Dashboard

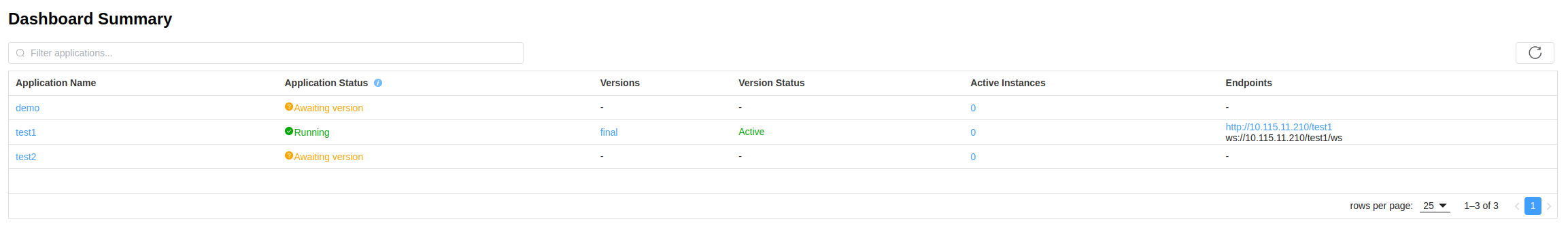

The dashboard summary table provides insight into the most important information about the cluster:

- The list of your SPS applications currently running or waiting on your cluster;

- Information about the versions assigned to each application and their status;

- Endpoints for each application used to launch its instances and the number of currently active instances for each application;

- Easy access to the listed application and version details via hyperlinks.

The running instances stats panel shows a live view of the total number of active, pending, and terminating instances across all applications on the cluster.

The stats panel displays a live view of the total application and active version count across the entire cluster.

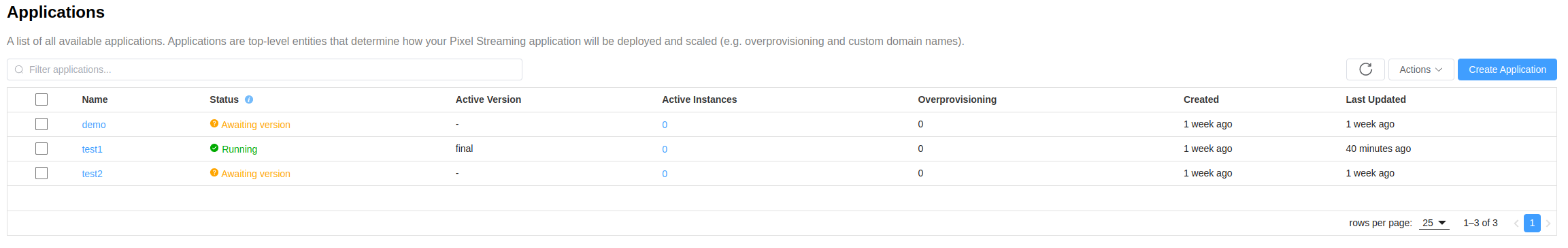

Applications

You can select one or more applications and interact with them via the Actions dropdown, where you can edit them, create new versions, mark them as inactive, duplicate them, or delete them.

Read more about configuring and managing your applications here.

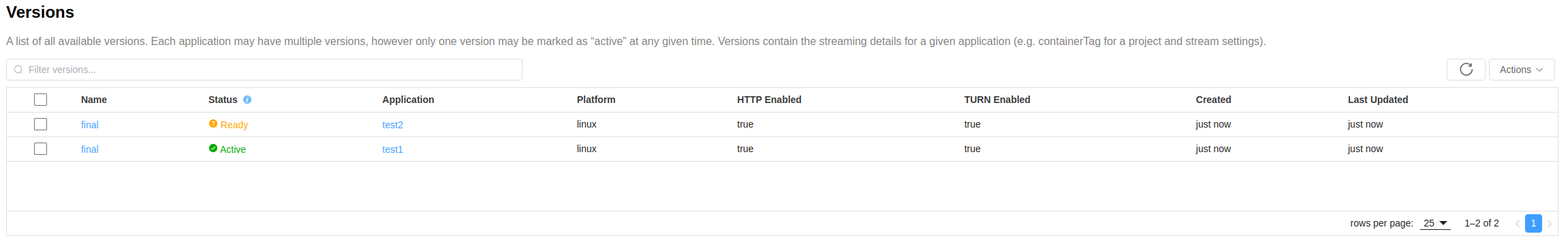

Versions

The versions summary table provides insight into the status of all your versions, their parent applications, their platform, whether HTTP and TURN are enabled, and the timestamps of version creation and last update. The hyperlinks give quick access to the details of any version or its parent application.

You can select one or more versions and interact with them via the Actions dropdown, where you can view their details or delete them.

Read more about configuring and managing your versions here.

Instances

The instances summary table provides live updates on all active instances currently running on the cluster. It lists the unique pod name of each instance, its status, the application it was created from, the platform it’s running on, whether it is using HTTP and TURN, how many CPUs and how much memory have been allocated to it, and the timestamps of instance creation and last update.

You can select one or more instances and interact with them via the Actions dropdown, where you can view their details or delete them.

Read more about managing your instances here.

Settings

This menu contains the settings of your Scalable Pixel Streaming deployment on the current cloud formation.

General: View and adjust the node size and the number of instances per node for your CPU, Linux GPU, and Windows GPU resources. These settings are global and apply to every application.

Instances per node

Linux and Windows GPUs allow you to configure Instances per node, also known as multitenancy. Increasing this value beyond 1 allows multiple instances to share a GPU.
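Ignoring scheduler placement and assuming instances are packed onto nodes as tightly as possible, the number of GPU nodes needed for a given number of instances is a ceiling division. The helper name below is hypothetical.

```python
import math


def nodes_needed(instances: int, instances_per_node: int) -> int:
    """GPU nodes required to seat `instances` at the given multitenancy setting."""
    if instances_per_node < 1:
        raise ValueError("instances_per_node must be at least 1")
    # Each node holds up to `instances_per_node` instances; round up for a partial node.
    return math.ceil(instances / instances_per_node)
```

With 2 instances per node, five instances need three nodes, which matches the example GPU group configuration further down this page.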

Minimum group size

The minimum size of the GPU node group determines how many nodes are kept ready across your SPS installation. These “ready” nodes are available at all times and are used by instances of any application running on the installation on a first-come, first-served basis. Once taken, a node only becomes available again when the instances seated on it are terminated.

Increasing the minimum GPU group size will increase the cost of your infrastructure since some nodes will be running at all times.

Maximum group size

The maximum size of the GPU node group determines how many nodes at most can be provisioned across all applications, which in turn determines how many instances can run at the same time.

For example, with a multitenancy setting of 2 and a maximum group size of 5, at most 10 instances can run at the same time. Any further instances are queued and remain pending until resources free up.
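The arithmetic in this example can be written as a small helper. `split_running_pending` is a hypothetical name, and the sketch assumes all instances are requested at once with nothing else already running on the node group.

```python
from typing import Tuple


def split_running_pending(
    requested: int, instances_per_node: int, max_group_size: int
) -> Tuple[int, int]:
    """Return (running, pending) when `requested` instances are created at once."""
    capacity = instances_per_node * max_group_size  # e.g. 2 * 5 = 10 seats
    running = min(requested, capacity)
    pending = requested - running  # queued until a seat frees up
    return running, pending
```

Requesting 12 instances with a multitenancy setting of 2 and a maximum group size of 5 gives `(10, 2)`: ten instances run and two wait.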

Example GPU group configuration

Let’s look at a real-life example that ties these settings together.

- A user has set the minimum size of their Linux GPU node group to 2, has configured 2 instances per node, and has set the maximum size of the group to 3.

- The user has two Linux applications, A and B, ready to serve their customer.

- The customer spins up five instances of these applications in this order: A1, A2, B1, B2, A3.

- Instances A1, A2, B1 and B2 will be immediately assigned to the two “ready” nodes, which they can share, so their spin-up time will be drastically reduced. Instance A3 doesn’t have a ready node, so a new node will need to be provisioned for it from scratch, which means it will take longer to launch. The allocation will look like this:

| ReadyNode1: A1,A2 | ReadyNode2: B1,B2 | NewNode3: A3 |

- Once the node for instance A3 has been spun up, if a new instance A4 is created, it will share the already provisioned node with instance A3, which will drastically reduce the spin-up time of A4 as well:

| ReadyNode1: A1,A2 | ReadyNode2: B1,B2 | NewNode3: A3,A4 |

- Instances A3 and A4 are then terminated. The node isn’t destroyed immediately and will persist for a preset amount of time (2 minutes on AWS). If an instance A5 is spun up within this period, it will occupy this node, which will reduce its spin-up time:

| ReadyNode1: A1,A2 | ReadyNode2: B1,B2 | NewNode3: A5 |

- Instance B1 is now terminated, freeing up a seat on ReadyNode2. A new instance A6 is spun up. It now has two available nodes: ReadyNode2 and NewNode3. At this stage, Kubernetes determines which node it will be assigned to, depending on resource usage and the resulting weighting and score from the scheduler. Let’s say the scheduler assigns it to ReadyNode2:

| ReadyNode1: A1,A2 | ReadyNode2: A6,B2 | NewNode3: A5 |

- A new instance A7 is requested, and it will share the node with A5:

| ReadyNode1: A1,A2 | ReadyNode2: A6,B2 | NewNode3: A5,A7 |

- Two more instances are created: A8 and B3. No nodes are available to them, as the maximum group size has been reached, so they will queue up until one of the previous instances is terminated.

- All instances are now terminated. After a few minutes, NewNode3 will be destroyed, but ReadyNode1 and ReadyNode2 will persist, awaiting new instances.
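To make the walkthrough above easier to experiment with, here is a toy Python model of a single GPU node group. It is a simplification, not how SPS or Kubernetes actually schedule pods: it uses first-fit placement (the real scheduler scores nodes), models the idle-node grace period with an explicit `expire_idle()` call instead of a timer, and ignores spin-up times entirely. All class and method names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    name: str
    ready: bool  # True for nodes kept alive by the minimum group size
    seats: List[str] = field(default_factory=list)


class GpuNodeGroup:
    """Toy model of a GPU node group with min/max size and instances per node."""

    def __init__(self, min_size: int, max_size: int, instances_per_node: int):
        self.max_size = max_size
        self.per_node = instances_per_node
        self.nodes: List[Node] = [
            Node(f"ReadyNode{i + 1}", ready=True) for i in range(min_size)
        ]
        self.pending: List[str] = []  # instances queued for a free seat
        self._next_id = min_size + 1

    def _place(self, instance: str) -> bool:
        # First fit over existing nodes (simplification of the Kubernetes scheduler).
        for node in self.nodes:
            if len(node.seats) < self.per_node:
                node.seats.append(instance)
                return True
        if len(self.nodes) < self.max_size:
            # Provision a new node on demand (slow in a real cluster).
            self.nodes.append(Node(f"NewNode{self._next_id}", ready=False, seats=[instance]))
            self._next_id += 1
            return True
        return False  # maximum group size reached

    def create(self, instance: str) -> None:
        if not self._place(instance):
            self.pending.append(instance)

    def terminate(self, instance: str) -> None:
        if instance in self.pending:
            self.pending.remove(instance)
            return
        for node in self.nodes:
            if instance in node.seats:
                node.seats.remove(instance)
                break
        # A freed seat lets queued instances start, oldest first.
        while self.pending and self._place(self.pending[0]):
            self.pending.pop(0)

    def expire_idle(self) -> None:
        """Destroy empty on-demand nodes once their grace period has passed."""
        self.nodes = [n for n in self.nodes if n.ready or n.seats]

    def snapshot(self) -> Dict[str, List[str]]:
        return {n.name: sorted(n.seats) for n in self.nodes}
```

Replaying the first step of the example, creating A1, A2, B1, B2 and A3 on `GpuNodeGroup(min_size=2, max_size=3, instances_per_node=2)` makes `snapshot()` return `{'ReadyNode1': ['A1', 'A2'], 'ReadyNode2': ['B1', 'B2'], 'NewNode3': ['A3']}`, matching the first allocation table. With first-fit placement, A6 lands on ReadyNode2, the same choice the example assumes the scheduler makes.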

Overprovisioning

The number of nodes you would like reserved and ready to go at all times. This setting will drastically decrease instance spin-up times at the cost of running a number of nodes at all times. Read more about overprovisioning here.

Enabling overprovisioning will drastically increase your cloud infrastructure costs.

The key difference between the minimum size of the node group and overprovisioning is that the former reserves a fixed number of nodes that are always ready. These nodes are used by instances on a first-come, first-served basis and are not replenished, so they only become available again when the instances on them are terminated. Any new instance that finds no available ready node requests a new node only when it is created, which takes time because the node is provisioned on demand. With overprovisioning, in contrast, the framework immediately spins up a new node as soon as one of the “ready” pre-provisioned nodes is occupied by an instance. This ensures that every new instance has a ready node waiting for it.
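The difference can be illustrated by counting how many instances in a burst find a pre-provisioned node waiting for them. This hypothetical `warm_starts` function is a rough sketch that assumes one instance per node, no terminations, no maximum group size, and arrivals spaced far enough apart for a replacement node to finish provisioning between them; real timing will differ.

```python
def warm_starts(arrivals: int, reserved: int, overprovisioning: bool) -> int:
    """Count instances that find a pre-provisioned node waiting for them.

    `reserved` is the minimum group size (without overprovisioning) or the
    overprovisioning count (with it). Assumes one instance per node.
    """
    warm = 0
    idle_ready = reserved  # nodes provisioned ahead of demand
    for _ in range(arrivals):
        if idle_ready > 0:
            warm += 1
            idle_ready -= 1
            if overprovisioning:
                idle_ready += 1  # a replacement node is spun up immediately
        # Otherwise the instance waits for a node provisioned on demand.
    return warm
```

With 2 reserved nodes and 5 arrivals, the function returns 2 without overprovisioning and 5 with it; the price is that 2 idle nodes keep running the whole time.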

Note: The framework also runs CPU nodes to host the control plane for each application, but these are scaled and managed automatically.

Scaling limits

The following settings allow you to limit the number of replicas for various infrastructure components for each application.

Frontend Server: Specify a container image with your custom frontend as well as its scaling limits for each application.

Signalling Server: Specify the scaling limits of your signalling server for each application.

Authentication Plugin: Specify the scaling limits of authentication server replicas for each application.

Instance Manager Plugin: Specify the scaling limits of instance manager server replicas for each application.

REST API: Specify the scaling limits of REST API server replicas for each application.

Logout

Immediately logs you out and takes you back to the login screen.